Bloomberg's Mercedes EQS review shows how dangerous AI summaries can be

Something called "Bloomberg AI" confidently overstated the range of the Mercedes-Benz EQS 450+ by 127 miles. Welcome to the future.

published Jun 15, 2025 | updated Jul 16, 2025

Key Takeaways

- According to Bloomberg's AI summary, the 2025 Mercedes-Benz EQS 450+ has 127 more miles of range than it actually does.

- The danger of unvetted AI summaries is that only subject-matter experts — a small slice of humanity in any given area — can tell when they're wrong.

When a friend sent me Bloomberg's review of the 2025 Mercedes-Benz EQS 450+ yesterday (paywalled, sorry), my car-addled brain executed its usual automatic data query:

Right, that's the long-range one with rear-wheel drive. Not Lucid-long, but not bad for a German.

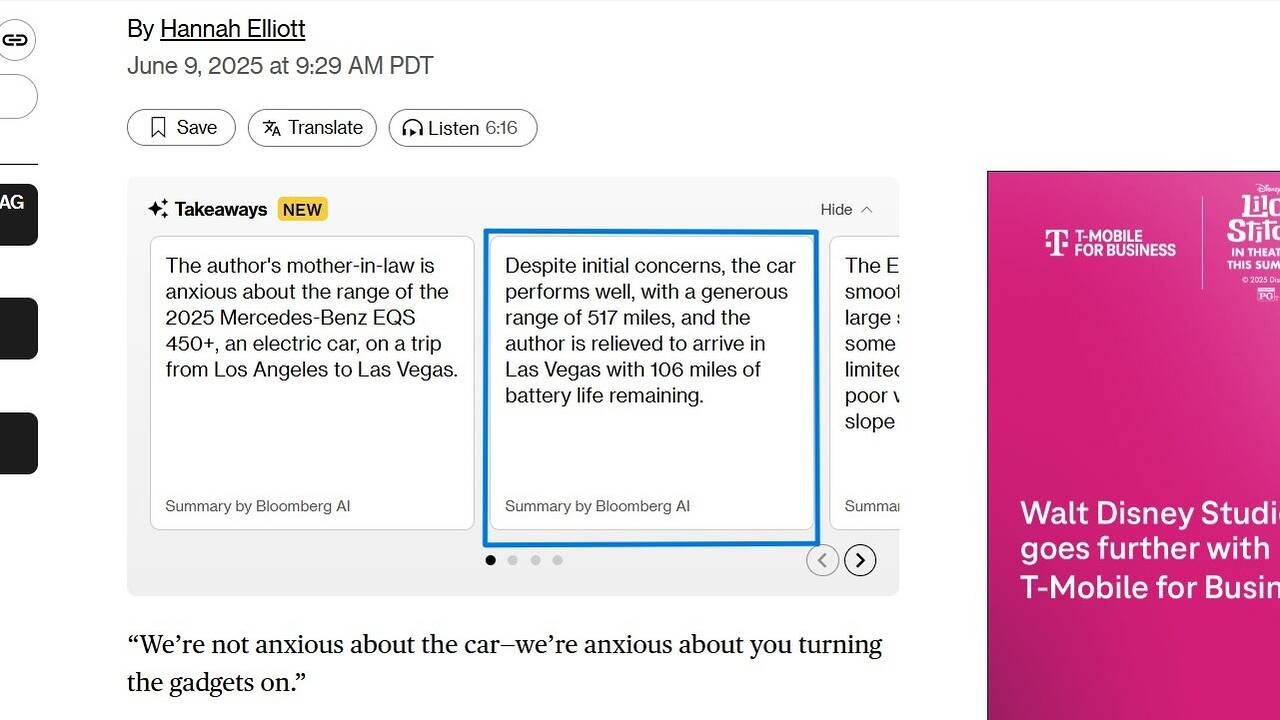

Then I opened the article to find a "Takeaways" module right at the top, adorned with that kind of starry, mini-constellation graphic we've quickly come to associate with AI. The takeaways were provided in card form, and the second one caught my eye. Check out the blue box below.

You can imagine my reaction to that 517-mile figure, which is five miles clear of the 2025 Lucid Air Grand Touring — the longest-range electric vehicle on the market. Did I somehow miss the fact that the 2025 EQS 450+ suddenly has the most range of any EV?!

I checked MotorDonkey's own data, which confirmed my initial recollection of this model's standing: 390 miles, well below Lucid's level and also 20 miles shy of the Tesla Model S. I double-checked on the EPA's website: yep, 390 miles.

Okay. So what the heck was "Bloomberg AI" talking about?

Caveat lector — let the reader beware...if possible

It was time to read the article itself, wherein I found it written: "When we pulled out of town, a dashboard icon [emphasis added] said the car had 517 miles...We made it to Las Vegas with 106 miles."

Now, this outcome makes perfect sense in light of both the 390-mile EPA range estimate and the verified high-speed efficiency of the EQS 450+. While a lot of EVs have been observed to shed miles rapidly around 70 mph or above, this particular Benz isn't one of them, having racked up 400 miles at 75 mph in a 2022 real-world test. So yeah, if you're starting in LA with 390 miles of range, you're gonna get to Vegas — a highway-heavy journey of 275 miles — with about 100 miles left, give or take a handful.

But here's the thing: that official 390-mile range estimate isn't mentioned anywhere in the article, despite being possibly the most important fact about the car. The only range estimates cited by the author were generated by the EQS, including the fateful 517-mile projection.

That put Bloomberg AI — tasked with confidently summarizing whatever article it's being fed, but evidently lacking the Captain Obvious-grade awareness that a "dashboard icon" may not be a credible source — in a tough spot.

And what came out the other end? A steaming pile of virtual cow manure that inflates the Benz's actual range by 33 percent:

"The car performs well, with a generous range of 517 miles."

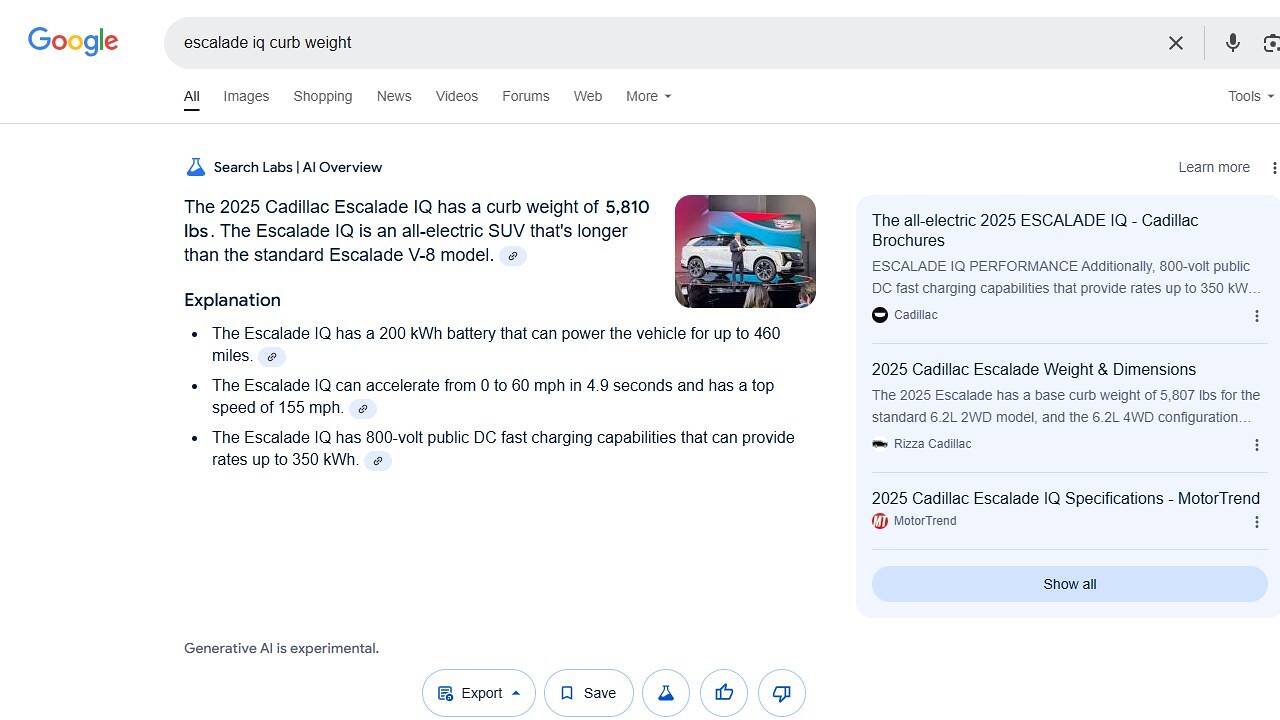

I've seen this kind of error countless times while searching for car information, shaking my head at Google's AI Overviews as the machine keeps trying to make connections that just aren't there. My favorite example is when I searched for "escalade iq curb weight" back in January, wanting to know how heavy Cadillac's new electric Escalade would be. At the time, no such data was available from any source, so a decent answer would have been, "We don't know yet, but given that the related GMC Hummer EV weighs about 9,000 pounds, the Escalade IQ will likely end up in that neighborhood." But the AI Overview really wanted to give me a factual response, so it said the IQ weighed 5,810 pounds, which is the approximate curb weight of a regular rear-drive Escalade with the V8 gas engine.

For the record, the Escalade IQ weighed in at 9,134 pounds on MotorTrend's scales in March. Also, take a look at that second bullet point — a 155-mph Escalade IQ is only slightly more plausible than a three-ton one.

Please, don't get me wrong on this. Generative AI is amazingly good at some things, such as debugging thousands of lines of code in a few seconds and creating silly images on demand. I use it regularly myself for these purposes.

But specifically with regard to generative AI text summaries, the combination of (1) being programmed to give authoritative answers, and (2) having to work with imperfect or conflicting information, perpetually threatens to result in (3) straight-up manure.

Unlike real manure, though, not everyone will notice that it stinks. If you're reading Bloomberg's EQS 450+ review as a non-expert, all you've got to go on is the AI summary's reassuringly assertive tone, plus the fact that a major publisher has decided to make it the first thing you see. You may well just take it at face value, and now you'll be moving forward with a very wrong idea in your head.

MotorDonkey says

The danger, of course, is that most of us aren't experts in most areas, so we're generally not equipped with the knowledge to recognize when AI has gone astray.

In the case of the EQS 450+, we can agree that the stakes are relatively low: society won't suffer overmuch because a bunch of Bloomberg readers now believe that this random EV has a lot more range than it actually does.

But what if Bloomberg AI were tasked with summarizing an article about the recent anti-ICE protests in Los Angeles, for example? How big of an area was affected? What roles were played by the National Guard and the Marines? Now we're talking about details that really do matter if we're going to have a shared understanding of reality, which seems like a prerequisite for a healthy democracy.

I'm happy to let AI debug my code or cook up ridiculous images that I send to my friends, but we should be leery of turning over the crucial job of summarizing information to error-prone machines that sound right even when they're wrong. Nonetheless, the publishing world seems to be rushing headlong toward an AI-summarized future in which human fact-checkers are increasingly marginalized or absent altogether. That may be good for certain companies' bottom lines, but it doesn't bode well for the broader project of disseminating truth, which needs all the help it can get. ⛐ md

by Josh Sadlier

Publisher and Donkey-in-Chief

Josh has been reviewing cars professionally since joining Edmunds.com fresh out of grad school in 2008. Prior to founding MotorDonkey, he spent 15 years shaping Edmunds' expert automotive content in various capacities, starting as an associate editor and ultimately serving as a senior editor before wrapping up with a five-year term as the company's first-ever director of content strategy. Josh is a card-carrying member of the Motor Press Guild and a lifelong car nut who has driven, compared and critiqued thousands of cars in his career. Helping people find their perfect car never gets old—seriously!
